Definition of Network Topology Architecture

Modern network topology is one of the most difficult things to define, because the needs and requirements of enterprises differ. Even within the same enterprise, those needs and requirements may fluctuate. Tailoring a network design that an enterprise will trust demands not only technical expertise but also years of practical experience on the part of the network engineer.

To state it again: this is not a tutorial. These topics were covered in detail in Jeremy IT Lab LAN Architectures, Jeremy IT Lab Virtualization & Cloud, and Jeremy IT Lab WAN Architectures (you will definitely see some of his wording here because I have listened to him a lot over the last two years).

According to Cisco, network topology is

… used to describe the physical and logical structure of a network. It maps the way different nodes on a network — including switches and routers — are placed and interconnected, as well as how data flows. Diagramming the locations of endpoints and service requirements helps determine the best placement for each node to optimize traffic flows.

In the design of network topologies, the architecture chosen is never arbitrary. These deployments can be resource intensive, especially on the financial side, so the architecture is shaped directly by the specific needs of the enterprise. These needs differ significantly depending on the size of the organization, its departments, its geographic distribution, available budget, and the regulatory or compliance environment in which it operates. At the top of these considerations are four core drivers: stability, speed, scalability, and security.

Technical Definitions

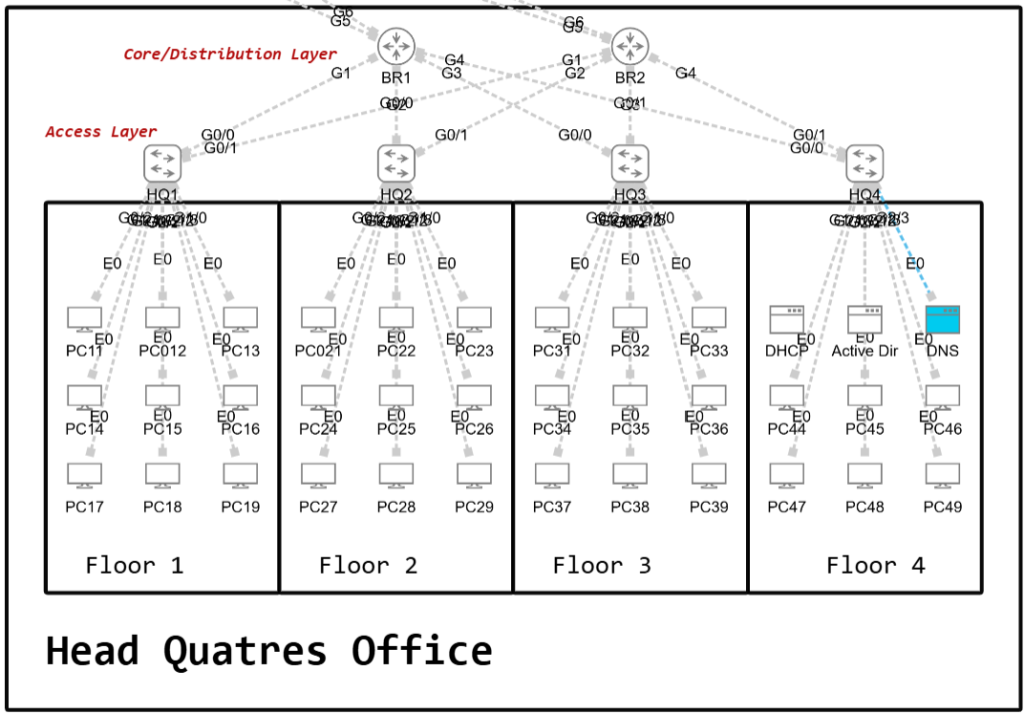

1.2.a Two-tier

The two-tier architecture, also known as a collapsed core design, consists of two hierarchical layers: the access layer and the core/distribution layer. It omits the dedicated core layer found in a three-tier design, making it a simpler and more cost-effective option.

At the access layer, end devices such as servers, desktop computers, wireless LAN controllers, access points, IoT devices, IP phones, and printers connect directly to the access switches located on each floor. These switches provide the first point of connectivity and aggregation for users and devices. They usually have comparatively higher port density and often provide features such as Power over Ethernet (PoE), port security, Dynamic ARP Inspection (DAI), and Quality of Service (QoS) marking to prioritize critical traffic.

Traffic from the access switches is forwarded upward to the distribution layer, which aggregates connections from all access layer switches. The distribution layer is where network policies such as Access Control Lists (ACLs) and redundancy protocols like HSRP or VRRP are configured, and it provides uplinks to external services including the WAN, internet, or enterprise data centers.

The trade-off, however, is that performance can be limited. When Layer 2 switches are used at the access layer, redundant links are blocked by the Spanning Tree Protocol (STP), reducing overall efficiency. By contrast, deploying Layer 3 switches or routers at the access layer allows the use of Equal-Cost Multi-Path (ECMP) routing, which keeps multiple paths active and improves throughput. This makes the design more resilient and better suited to larger enterprise branches, where redundancy and traffic handling are critical. Another advantage is that the access and distribution layers can be expanded independently, allowing the network to scale flexibly to meet growing business demands.
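To make the ECMP point concrete, here is a minimal sketch of per-flow hashing across equal-cost uplinks. The function name `pick_uplink`, the uplink labels, and the flow tuples are hypothetical, and real switches use vendor-specific hardware hash functions rather than SHA-256. The sketch only illustrates that every packet of a flow maps to the same uplink while different flows spread across all active uplinks.

```python
import hashlib

def pick_uplink(flow, uplinks):
    """Pick one equal-cost uplink for a flow (illustrative hash, not a vendor algorithm)."""
    # flow is a 5-tuple: (src_ip, dst_ip, protocol, src_port, dst_port)
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Reduce the hash to an index so the same flow always maps to the same uplink.
    index = int.from_bytes(digest[:4], "big") % len(uplinks)
    return uplinks[index]

uplinks = ["dist-sw1", "dist-sw2"]  # both uplinks forward traffic, unlike an STP-blocked link
flows = [("10.1.1.10", "10.2.2.20", "tcp", 49152 + i, 443) for i in range(1000)]

usage = {name: 0 for name in uplinks}
for flow in flows:
    usage[pick_uplink(flow, uplinks)] += 1

print(usage)  # each uplink carries a share of the 1000 flows
```

Hashing per flow rather than per packet is the usual design choice because it avoids reordering packets within a single connection.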

The switches used at the access and distribution layers in a two-tier network are generally simpler and less expensive, and they do not come with core, application-centric functions. This makes them easier to manage, more cost-effective to deploy, and well suited to locations where only enterprise-grade connectivity and aggregation are required. In many cases, organizations adopt a two-tier model not just to save money but because it matches the exact operational needs of the site where it is deployed, whether that is a small headquarters, a branch office, or a retail environment.

1.2.b Three-tier

The three-tier LAN design is built around three hierarchical layers: the access layer, the distribution layer, and the core layer. This model is widely used in large enterprises, universities, and organizations with multiple buildings or departments because it delivers scalability, resilience, and performance. Cisco generally recommends introducing a core layer when a network has more than three distribution layers within a single location.

At the access layer, end devices such as computers, printers, IP phones, cameras, IoT devices, and wireless access points connect to access switches. These switches provide user connectivity, enforce port-level security, support Power over Ethernet (PoE) for IP phones and access points, and apply basic Quality of Service (QoS) marking.

The distribution layer sits above the access switches and aggregates their connections. It is the boundary between Layer 2 and Layer 3, where inter-VLAN routing is performed, and policies such as Access Control Lists (ACLs), route filtering, and redundancy protocols (HSRP, VRRP, GLBP) are applied. This layer also provides connectivity to external services such as WAN and data centers, ensuring that departmental traffic flows are efficiently managed.

The core layer functions as the high-speed backbone of the network. Its primary purpose is fast and resilient transport between distribution blocks, avoiding CPU-intensive tasks such as packet filtering or QoS classification. All connections at this layer are Layer 3, which eliminates reliance on Spanning Tree Protocol (STP) and ensures continuous connectivity even if devices fail. By keeping the core focused purely on speed and redundancy, the network achieves stability and high availability at scale.

The three-tier architecture offers several key benefits. It is highly scalable, allowing new access and distribution blocks to be added as the enterprise grows. It is reliable, with redundancy at multiple levels so the network remains operational even if one component fails. It also delivers better performance, as the core layer provides dedicated bandwidth and reduces congestion between departments or sites.

The trade-offs are increased cost and complexity, since the design requires very high-performance hardware, more interconnections, and greater expertise to deploy and maintain. For this reason, the three-tier model is most appropriate for large enterprises, universities, and organizations that require secure, high-speed connectivity across multiple sites and departments with room to grow.

NB: For smaller LANs, two tiers are sufficient, but once the network grows beyond Cisco's guideline (more than three distribution layers in a single location), you have to scale to a three-tier design for operational and management efficiency.
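One common way to motivate the "more than three distribution layers" guideline is to count interconnections. The sketch below is a simplified model, not Cisco's own calculation: it treats each distribution layer as a single node and the core as a single block, and it ignores redundant links. The function names are hypothetical.

```python
def full_mesh_links(distribution_layers):
    """Links needed to connect every distribution layer directly to every other one."""
    return distribution_layers * (distribution_layers - 1) // 2

def core_links(distribution_layers):
    """Links needed when each distribution layer connects only to a shared core block."""
    return distribution_layers

# Compare the two designs as the number of distribution layers grows.
for layers in range(2, 7):
    print(layers, full_mesh_links(layers), core_links(layers))
```

In this model the two designs need the same number of links at three distribution layers (3 each), and the full mesh pulls ahead from four onward (6 versus 4), which is where a dedicated core starts to pay for itself.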

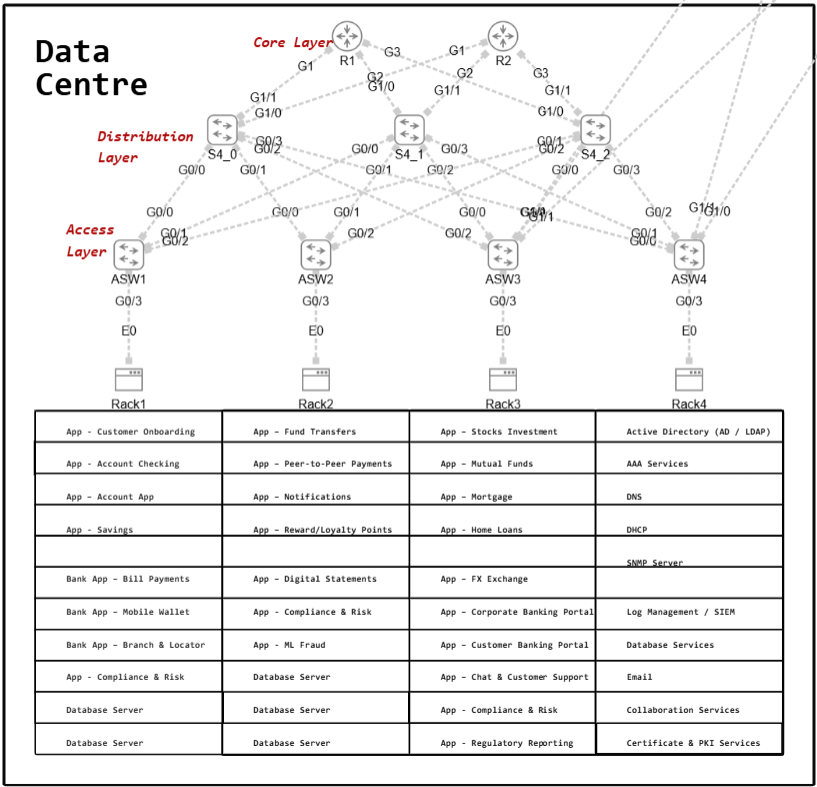

1.2.c Spine-leaf

This design was developed for data center operations, so it is best described as a data center design. Spine-leaf fabrics are the foundation of what Cisco refers to as Massively Scalable Data Center (MSDC) network fabric designs. According to Cisco:

Massively Scalable Data Centers (MSDCs) are large data centers, with thousands of physical servers (sometimes hundreds of thousands), that have been designed to scale in size and computing capacity with little impact on the existing infrastructure. Environments of this scale have a unique set of network requirements, with an emphasis on application performance, network simplicity and stability, visibility, easy troubleshooting, and easy life-cycle management, etc. Examples of MSDCs are large web/cloud providers that host large distributed applications such as social media, e-commerce, gaming, Software as a Service (SaaS), Artificial Intelligence and Machine Learning (AI/ML) workloads, etc. These large web/cloud providers are often also referred to as hyper-scalers or cloud titans.

The central focus of modern data center design is application performance. Today’s applications are built on modular deployments and microservices, where numerous smaller components must constantly interact across different physical servers. This generates heavy east-west traffic within the data center, far exceeding traditional north-south flows between users and the internet.

Modern networks must be able to scale compute instances up or down on demand and support fast failover in case of bottlenecks or failures. Redundancy at the switching and routing layers ensures workloads can reroute instantly, a necessity for industries like finance, healthcare, and e-commerce where downtime is costly. Virtualization platforms such as Hyper-V and VMware ESXi further enhance resiliency, with features such as Live Migration on Hyper-V and vMotion on VMware moving running workloads between hosts without service disruption.

Segmentation is equally vital. Technologies such as VLANs, VRFs, VXLANs, and micro-segmentation isolate tenants and microservices on shared infrastructure, maintaining both security and predictable performance.

At the heart of this ecosystem is the spine-leaf fabric, designed for scalability, resiliency, and efficiency. Each leaf connects to every spine, creating a full mesh between the two tiers with predictable latency, uniform bandwidth, and multiple active paths. Unlike hierarchical designs, there are no lateral leaf-to-leaf or spine-to-spine links. End hosts connect only to leaves, ensuring consistency: every server is the same number of hops away from any server attached to a different leaf.
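The hop-count property can be checked with a small model. The sketch below builds a spine-leaf fabric as an adjacency map and measures shortest paths with breadth-first search. The node names and fabric size are hypothetical, and "hops" here means the number of links on the path.

```python
from collections import deque
from itertools import combinations

def build_fabric(spines, leaves, servers_per_leaf):
    """Return an adjacency map for a spine-leaf fabric (hypothetical node names)."""
    graph = {}

    def link(a, b):
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    for l in range(leaves):
        leaf = f"leaf{l + 1}"
        for s in range(spines):
            link(leaf, f"spine{s + 1}")        # every leaf connects to every spine
        for h in range(servers_per_leaf):
            link(leaf, f"srv{l + 1}-{h + 1}")  # hosts attach only to leaves
    return graph

def hops(graph, src, dst):
    """Breadth-first search: number of links on the shortest path, or None."""
    seen, queue = {src}, deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph[node] - seen:
            seen.add(nxt)
            queue.append((nxt, dist + 1))
    return None

fabric = build_fabric(spines=2, leaves=4, servers_per_leaf=2)
servers = sorted(n for n in fabric if n.startswith("srv"))
# A server's only neighbour is its leaf, so differing neighbour sets mean different leaves.
cross_leaf = {hops(fabric, a, b) for a, b in combinations(servers, 2)
              if fabric[a] != fabric[b]}
print(cross_leaf)  # {4}: server, leaf, spine, leaf, server
```

Servers that share a leaf are only two links apart, so the uniform distance applies to traffic that crosses the fabric.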

External connectivity is provided through edge or boundary nodes, which allow controlled traffic to flow beyond the fabric. Security and segmentation are enforced using ACLs, VLAN/VRF boundaries, and VXLAN overlays, while routing protocols such as OSPF and BGP manage internal and external route propagation. This preserves both the integrity and efficiency of the fabric, enabling seamless and secure communication across modern enterprise and hyperscale data centers.

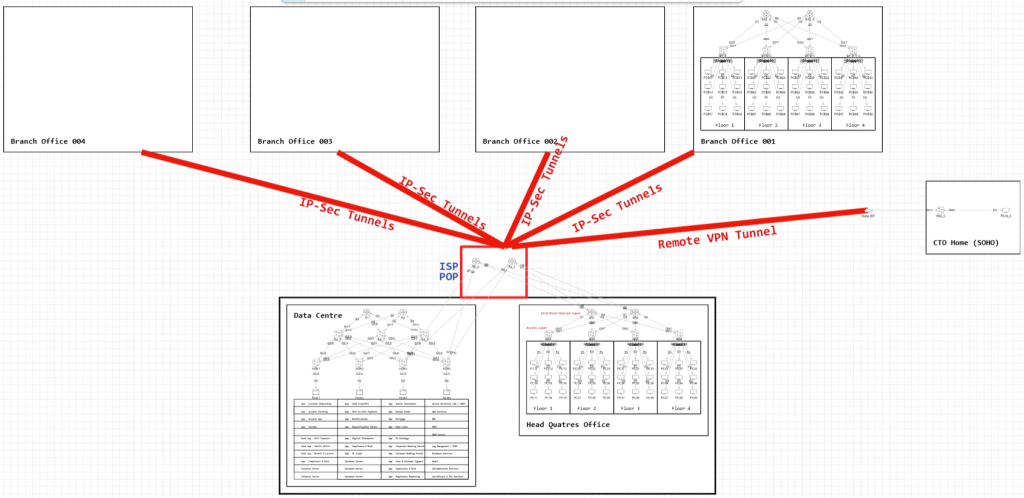

1.2.d Wide Area Network

A Wide Area Network (WAN) is a network that spans large geographic areas and connects multiple Local Area Networks (LANs). WANs are used by enterprises to link offices, data centers, and remote sites together. While the Internet itself can be seen as a WAN, the term is usually applied to private enterprise connections. In situations where traffic must move over public infrastructure like the Internet, VPNs are used to build secure, private WAN connections. Depending on geography, different WAN technologies are available, and what is considered “legacy” in one region may still be in use elsewhere.

In practice, it is network service providers such as Bell, AT&T, and others that deliver these WAN services over what are essentially private “digital highways.” They own and operate the backbone infrastructure, and in fact, many of them are also the main deployers of spine-leaf topologies at scale to support the enormous east-west traffic patterns across their networks.

Because these backbones are shared by many customers, providers use network virtualization and segmentation to isolate traffic. This creates logically separate environments for each customer, commonly referred to as tenants. For example, twelve banks in Canada, alongside one hundred power stations, multiple insurance companies, and other organizations with WAN needs, may all run across the same physical backbone. Yet, through technologies such as VRFs, MPLS VPNs, or VXLAN overlays, each tenant remains fully isolated. No customer can view or interfere with another’s traffic, even though they are technically traversing the same infrastructure.
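As a rough illustration of VRF-style isolation, the sketch below keeps one routing table per tenant on a toy provider-edge router, so two tenants can use the same prefix without seeing each other's routes. The class `ProviderEdge`, the tenant names, and the next-hop labels are all hypothetical; a real MPLS VPN also involves route distinguishers, route targets, and label exchange, which are left out here.

```python
import ipaddress

class ProviderEdge:
    """Toy provider-edge router holding one routing table per tenant (VRF)."""

    def __init__(self):
        self.vrfs = {}  # tenant name -> list of (network, next_hop)

    def add_route(self, tenant, prefix, next_hop):
        self.vrfs.setdefault(tenant, []).append(
            (ipaddress.ip_network(prefix), next_hop))

    def lookup(self, tenant, address):
        """Longest-prefix match restricted to the tenant's own table."""
        addr = ipaddress.ip_address(address)
        matches = [(net, hop) for net, hop in self.vrfs.get(tenant, [])
                   if addr in net]
        if not matches:
            return None
        return max(matches, key=lambda m: m[0].prefixlen)[1]

pe = ProviderEdge()
pe.add_route("bank-a", "10.0.0.0/8", "bank-a-hq")
pe.add_route("bank-a", "10.1.0.0/16", "bank-a-branch")
pe.add_route("utility-b", "10.1.0.0/16", "utility-b-plant")  # same prefix, other tenant

print(pe.lookup("bank-a", "10.1.2.3"))       # bank-a-branch
print(pe.lookup("utility-b", "10.1.2.3"))    # utility-b-plant
print(pe.lookup("utility-b", "10.200.0.1"))  # None: bank-a's 10.0.0.0/8 is invisible here
```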

For enterprises, the priority is usually to manage and secure their own internal networks, while leaving the actual branch-to-branch connectivity to their service provider partners. This division of responsibility allows companies to focus on application performance, policy, and security, while relying on carriers to guarantee the underlying connectivity across cities, provinces, or even continents. Some of the technologies used to achieve this include:

- WAN over Dedicated Connections (Leased Lines):

One of the earliest WAN technologies is the leased line, a dedicated physical link between two sites. Leased lines commonly use serial technologies with HDLC or PPP encapsulation. They are reliable but expensive, with long installation times and lower bandwidth compared to modern Ethernet WANs. For this reason, Ethernet delivered over fiber has become the more popular choice for enterprise WAN connections. In a traditional leased line setup, a hub-and-spoke topology is often used, where branch offices connect directly back to a central hub.

- MPLS VPNs:

Multi-Protocol Label Switching (MPLS) is a widely adopted service provider technology that allows enterprises to connect sites over a shared backbone. Service providers’ MPLS networks are shared infrastructures, but MPLS uses label switching to create VPNs that keep customer traffic isolated. Customer Edge (CE) routers connect to Provider Edge (PE) routers, while Provider (P) routers handle the internal forwarding of labeled packets. In a Layer 3 MPLS VPN, CE routers form routing adjacencies with PE routers using protocols such as OSPF. Routes from one CE are then advertised through the provider to the CE at another site, enabling seamless communication. In contrast, a Layer 2 MPLS VPN makes the provider’s backbone transparent. The CE routers appear directly connected in the same subnet, and if a routing protocol is used, the CE routers peer with each other as if no provider network existed. MPLS can be accessed through various underlying technologies, and segmentation is achieved through VRFs that ensure customers remain isolated from one another, even though thousands of tenants may share the same physical backbone.

- Internet Connectivity:

Enterprises also connect to the Internet in a variety of ways. Traditional private WAN services such as leased lines and MPLS can be used to connect to a service provider’s Internet backbone. On the other hand, consumer technologies such as DSL and cable broadband are also used by some organizations, especially for branch offices. Increasingly, fiber-optic Ethernet is becoming the standard for both enterprise and consumer Internet access because of its ability to deliver high bandwidth over long distances. Connections can be single-homed, dual-homed, or multi-homed depending on how much redundancy and fault tolerance an organization requires.

- Internet VPNs:

When the Internet is used as a WAN, there is no inherent security or traffic separation. Enterprises solve this by deploying VPNs. Site-to-Site VPNs using IPsec create encrypted tunnels between two routers, allowing secure communication between two sites. The tunnel encapsulates and encrypts the original packets, protecting them as they traverse the public Internet. However, IPsec tunnels only support unicast traffic, which limits the ability to run multicast-based protocols such as OSPF. To address this, enterprises often use GRE over IPsec, which adds flexibility by supporting multicast and broadcast traffic while still maintaining encryption with IPsec. A more advanced solution is DMVPN (Dynamic Multipoint VPN), a Cisco-developed technology that simplifies configuration. Instead of manually creating a full mesh of tunnels between all sites, routers build IPsec tunnels dynamically when communication is needed. This provides the efficiency of direct spoke-to-spoke communication with the simplicity of a hub-and-spoke design.

- Site-to-Site vs Remote-Access VPNs:

Site-to-site VPNs provide permanent, encrypted connectivity between two or more locations, typically using IPsec. They allow entire offices to securely exchange data across the Internet and are ideal for interconnecting branch sites, data centers, or headquarters. Remote-access VPNs, by contrast, are designed for individual users. These VPNs typically use TLS (formerly SSL), the same protocol that secures HTTPS, to create on-demand encrypted tunnels from a single device into the enterprise network. Client software such as Cisco AnyConnect, installed on laptops or mobile devices, connects securely to a corporate firewall or router acting as the VPN server. This approach is especially well-suited for employees and contractors working from home or other untrusted networks, enabling them to safely access internal company resources wherever they are.
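The DMVPN idea described under Internet VPNs, permanent spoke-to-hub tunnels plus spoke-to-spoke tunnels built only when needed, can be sketched as a toy model. The class `DmvpnCloud` and the site names are hypothetical, and the model deliberately omits how real DMVPN resolves peers (NHRP), tunnel timeouts, and encryption details.

```python
class DmvpnCloud:
    """Toy model: permanent spoke-to-hub tunnels, spoke-to-spoke tunnels on demand."""

    def __init__(self, hub, spokes):
        self.hub = hub
        # One statically configured tunnel per spoke, always up.
        self.static = {frozenset((hub, spoke)) for spoke in spokes}
        self.dynamic = set()

    def send(self, src, dst):
        """Record traffic between two sites, creating a tunnel if none exists."""
        pair = frozenset((src, dst))
        if pair not in self.static:
            self.dynamic.add(pair)  # built only when traffic actually needs it
        return pair

    def tunnel_count(self):
        return len(self.static) + len(self.dynamic)

spokes = [f"branch{i}" for i in range(1, 20)]  # 19 spokes + 1 hub = 20 sites
cloud = DmvpnCloud("hq", spokes)
cloud.send("branch1", "hq")        # uses the existing static tunnel
cloud.send("branch1", "branch2")   # creates one dynamic tunnel
cloud.send("branch2", "branch1")   # reuses that same tunnel

sites = len(spokes) + 1
full_mesh = sites * (sites - 1) // 2  # tunnels a manual full mesh would need
print(cloud.tunnel_count(), full_mesh)  # 20 190
```

For 20 sites, a manually configured full mesh needs 190 tunnels, while this model holds 19 static tunnels plus only the spoke-to-spoke tunnels that traffic has actually triggered.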

1.2.e Small office/home office (SOHO)

A SOHO network is the setup used in small offices, home offices, or even households with only a few devices. It doesn’t have to be a formal office—any small space with internet-connected devices qualifies. Unlike enterprises that use multiple dedicated appliances, SOHO networks usually rely on a single home router that combines routing, switching, firewalling, wireless access, and sometimes modem functions. This makes them simple and affordable but also more limited in performance and security.

With the rise of remote work, SOHO networks often serve as the bridge between employees and enterprise systems. Because this traffic may cross the open internet, companies add extra layers of protection. VPN tunnels are used to encrypt communications end to end. Multi-factor authentication ensures users are properly verified. Zero Trust models demand that every request be authenticated, even within the VPN.

SOHO networks are essential for remote work and small setups, offering convenience through a single all-in-one device.

1.2.f On-premises and cloud

Cloud computing marks a major departure from traditional on-premises and co-location models. In the past, organizations had to purchase servers, networking gear, storage, and the physical environment—including space, power, and cooling—then manage it all themselves. Co-location provided some relief by renting space in third-party data centers, but responsibility for equipment upkeep still remained with the customer. The rise of cloud computing transformed this model by enabling IT resources to be consumed as services, rather than owned and operated directly.

In modern times, the default deployment model in practice is hybrid. Even organizations that maintain significant on-premises infrastructure are, at a minimum, using cloud services such as Microsoft 365, making “pure on-prem” environments increasingly rare. Hybrid cloud has become the de facto reality—combining local infrastructure with public cloud platforms to balance flexibility, scalability, and compliance requirements.

At its foundation, cloud computing is defined by five key traits: on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service. These capabilities make it possible to dynamically scale resources, support diverse devices, and pay only for what is consumed.

However, the shift from on-premises to hybrid and cloud brings with it a heightened focus on security. Protecting data and ensuring trust across multiple environments—whether local servers, SaaS platforms, or public cloud infrastructure—has become one of the central priorities for modern IT strategy. We will explore these security considerations in greater detail as we progress in this section.

Services in the cloud are generally delivered in three layers.

- Software as a Service (SaaS) provides fully managed applications such as Microsoft 365, Gmail, or Salesforce, where users simply consume the application without managing the underlying infrastructure.

- Platform as a Service (PaaS) gives developers an environment to build and deploy applications without worrying about the operating system or servers, with examples such as AWS Lambda or Google App Engine.

- Infrastructure as a Service (IaaS) offers the most flexibility by allowing organizations to rent virtualized infrastructure such as servers, storage, and networking, while maintaining control of the operating systems and applications themselves. Examples include AWS EC2 and Microsoft Azure virtual machines.
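The three service models differ mainly in who manages which layer. The sketch below encodes the descriptions above as a small lookup table. The three-layer split and the names `LAYERS` and `CUSTOMER_MANAGES` are simplifications chosen for illustration; real shared-responsibility models from cloud providers are more detailed (for example, they treat data and identity separately).

```python
# Simplified stack, top to bottom (an assumption for illustration only).
LAYERS = ["application", "operating system", "servers, storage, and networking"]

# Layers the customer manages under each model, following the descriptions above.
CUSTOMER_MANAGES = {
    "SaaS": set(),                                # customer only consumes the app
    "PaaS": {"application"},                      # no OS or servers to manage
    "IaaS": {"application", "operating system"},  # rents virtualized infrastructure
}

def responsibility(model):
    """Return {layer: 'customer' or 'provider'} for a service model."""
    managed = CUSTOMER_MANAGES[model]
    return {layer: ("customer" if layer in managed else "provider")
            for layer in LAYERS}

for model in CUSTOMER_MANAGES:
    print(model, responsibility(model))
```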

Cloud computing can also be deployed in four different models.

- A private cloud is dedicated to a single organization and may be managed internally or by a third party, providing the same services as public cloud but restricted to one entity.

- A community cloud is shared by organizations with common requirements, such as compliance or mission goals, though it is less common.

- Public cloud is the most widely used model and is available to the general public, with providers like AWS, Microsoft Azure, and Google Cloud Platform leading the space.

- Hybrid cloud is a combination of two or more models, enabling workloads to move between private and public clouds to balance scalability, cost, and flexibility.

To connect securely with public clouds, enterprises typically use private WAN services or encrypted VPN tunnels over the internet. This ensures sensitive data remains protected when moving between on-premises systems and cloud environments. Security measures such as IPsec VPNs and private connectivity options provide enterprises with confidence that their traffic is safeguarded.

The benefits of cloud computing are substantial. Organizations reduce capital expenditures by avoiding large upfront investments in hardware and data centers. Services can scale globally, with resources provisioned from locations close to users. The cloud also enables speed and agility, allowing IT teams to launch resources in minutes instead of weeks. Productivity increases as staff spend less time managing physical hardware, while reliability improves through built-in redundancy and disaster recovery capabilities.

In summary, cloud computing provides a flexible, scalable, and cost-effective way to deliver IT services. Its defining characteristics, layered service models, and deployment options give organizations the tools to meet modern demands for performance and security.